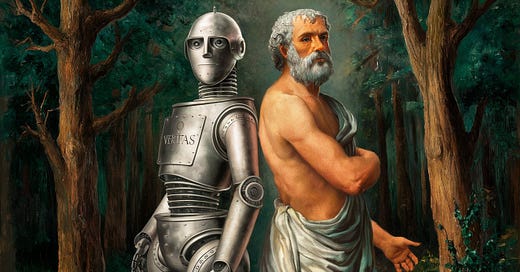

Socrates and the Stoics Want Us to Be Like This AI

What an LLM Just Taught Us About Being Better Humans

People are cheering because a study¹ found AI chat sessions reduced conspiracy theory belief by 21%.

Twitter got all “utopia is nigh” about it. Yay, the LLMs will save us from ourselves, etc.

I was excited for a different reason: the demonstration that humanity isn’t a lost cause. I’ll wait for replication before I’m fully convinced, but this is heartening if you care about humanity and doing good.

The dominant narrative is that you can’t use facts to root out false beliefs. Logic can’t contend with the vast array of cognitive biases we’re prone to, or with the seductive stories leading us astray. Some people may be amenable to reason, but the rest are stuck in a jumble of nonsensical cognitive loops with a good dose of vice mixed in.

In short, there’s nothing you can do about crackpot coworkers. Your family is a lost cause. Retreat into your bubble and hunker down.

That view is untenable if a quick 30-minute session with an LLM² reduces conspiracy beliefs by 21% for at least two months. Even the deluded may be subject to reason. Those valuing truth have dropped the ball by viewing much of humanity as a lost cause.

The Stoic Approach to Ignorance

Overwhelmed by a torrent of greedy, irrational, and ignorant people seeking his attention, the Roman Emperor and philosopher Marcus Aurelius mused in his journal, “People exist for one another. Teach them or bear with them.”³

He didn’t offer the option I’ve internally wrestled with: get angry that they’re gullible and incapable of coherent thought.

When I was young and stupid I sometimes tried to convince people of things I had convictions about but which they didn’t believe. I identified with these beliefs and wanted to spread them; I usually failed. I suspect Marcus experienced something similar.

Whether people are rational is outside our control, and pinning our happiness on them becoming rational is a recipe for misery. But Stoics care about humanity and want to do good. That we can help the world move away from ignorance is good news.

It’s noteworthy that the AI didn’t try to convince participants of any particular positive claim. It merely pointed out their beliefs weren’t compatible with a reasonable assessment of the facts.

Besides the 21% drop in belief, more than a quarter (27%) of participants became uncertain about their conspiracy theory, which might be the bigger win.

An Attitude of Self-Doubt

I’ve worked on keeping most labels out of my identity for the last ten years. I can’t think critically about what I identify with, so each thing I let in leaves me stupider and more error-prone.

Instead of beliefs, I prefer lightly-held working hypotheses that are always up for reconsideration. With this framing of reality, I feel lighter as I navigate life, and less defensive when confronted with opposing views.

Since it’s impossible to be well-informed about everything, it’s a relief to admit ignorance and refuse to have a firm opinion about things, even when people push you to.

"You always own the option of having no opinion. There is never any need to get worked up or to trouble your soul about things you can't control. These things are not asking to be judged by you. Leave them alone." — Marcus Aurelius, Meditations 6.52

This makes it easier to do as Marcus suggests: “…enter others’ minds and let them enter yours.”⁴

When I open my mind to someone I suspect has an incorrect belief, should I model being closed-minded and resistant to contradicting facts, or curious and interested in the truth above all else? How can I do that if I start off angry?

The Socratic Approach To Truth

I love Socrates’s approach. When told that the Oracle of Apollo had named him the wisest man in Athens, he concluded this could only be because he recognized how ignorant he was.

Socrates rarely made positive claims. Instead, by asking the right questions, he showed when someone’s confident assertions weren’t supported by reason. Socrates is a bit of a saint in Plato’s and Xenophon’s depictions, never losing his temper no matter how obtuse or immoral his interlocutors are.

This “via negativa” approach suggests we’ll get closer to the truth by identifying what something isn’t than by asserting what it is. It’s knowledge generated by subtraction. We take a step toward wisdom by stepping back from certainty.

A Roadmap For Changing Minds

If you really care about promoting truth and helping humanity but throw up your hands in frustration at stupid people, you should consider this study a rebuke. Not everyone is a lost cause. We can help people if we take the right approach, and if you’re committed to justice, this is necessary.

Perhaps most importantly, we can’t start with the assumption that our interlocutors have nothing to teach us. Respect their ideas and find areas of common ground.

“If anyone can refute me—show me I’m making a mistake or looking at things from the wrong perspective—I’ll gladly change. It’s the truth I’m after, and the truth never harmed anyone. What harms us is to persist in self-deceit and ignorance.”

― Marcus Aurelius, Meditations, 6.21

If someone insists cows are being abducted by aliens, I’ll probably spend a couple minutes geeking out about the Fermi paradox with them. Conspiracy wonks often have interesting points that are worth considering mixed in with their delusions.

If you’re unsure how to engage with someone you suspect holds false beliefs, start by attempting to understand them.

Listen closely and paraphrase their points to demonstrate respect and understanding.

Once they’ve stated their case, ask some questions.

Power Questions:

“How certain are you of this on a 1-100% scale?” (Assess at the start and end of the conversation)

“What sort of evidence would it take for you to change your view?”

These questions will give you a lot of info about the sort of person/belief you’re dealing with. If they say nothing will change their mind, or set the level of evidence so high that it would be almost impossible to achieve, then you’re probably wasting your time. Some people will never change their mind no matter the evidence.

Your goal should be to reduce their confidence in the false belief by pointing out contradictions and errors of reasoning. Do not try to convince them that a particular thing is true. Just point out that the evidence doesn’t support their belief.

If you can drive them below 50% certainty, I’d consider that a huge success. It’s almost impossible to prove a negative, but it’s often trivially easy to demonstrate that underlying assumptions are shaky.

If in doubt, reading Plato is a good start. Ward Farnsworth’s The Socratic Method is an excellent introduction to the sort of questions that help (and hurt) such discussions, and how you can use the Socratic method to investigate yourself and find clarity in your thinking.

Where AI Has Us Beat

Everything the AI did in this study can be replicated by well-intentioned humans willing to put in some effort.

But there may be two areas where the AI can outcompete us.

First, the AI will never grow exasperated by the stubborn blindness or poor reasoning of whoever it’s talking to. I suspect a good deal of its success lies here.

Second, we all have limited time and attention, and the conspiratorially minded are often obsessed with the minutiae of their subjects. It’s their hobby, and some have a nearly endless supply of claims that would take you ages to sort through.

In this study, the LLMs drew on the sum of human knowledge (or at least most of it). That’s quite the resource, and more than any human can have in their brain.

So perhaps the spread of AI will do more to cut down on human ignorance than actual humans ever will. But that assumes that humans will seek out the right sort of AI to disabuse them of their errors.

While we wait for this utopia to arrive, maybe strike up a conversation with that adorable crackpot in your life.

You might just change their mind, and maybe yours too.

1. Costello, T. H., Pennycook, G., & Rand, D. G. (2024, April 3). Durably reducing conspiracy beliefs through dialogues with AI.

2. The researchers had the participants chat with GPT-4 Turbo.

3. Marcus Aurelius, Meditations, 8.59

4. Marcus Aurelius, Meditations, 8.61

Very good article, and with practical hints for dealing with stubborn people.

You said “Yay, the LLMs will save us from ourselves”. Yes, I have that hope also. Especially when I look at how easily people fall for lies, scams, conspiracy theories these days.

Psychologist Daniel Kahneman (who recently passed away) describes two types of thinking:

“System 1” is thinking we do subconsciously, based on observing patterns in the world and using the massive parallel processing in our brains to predict what might come next. It is an intuitive and very fast way of thinking that we use almost all the time, but it can be wrong, as it is easily misled or even manipulated.

The other way of thinking that we humans do is called “System 2” and it occurs in our consciousness. We use logic and math applied to facts to reach conclusions that are more likely correct, assuming we do it right.

In the “Durably reducing conspiracy beliefs through dialogues with AI” paper, the authors wrote conspiracy theories “primarily arise due to a failure to engage in reasoning, reflection, and careful deliberation. Past work has shown that conspiracy believers tend to be more intuitive and overconfident than those who are skeptical of conspiracies.”

It is System 1 thinking that results in conspiracy beliefs.

The paper finds that GPT-4 Turbo used “reasoning-based strategies …evidence-based alternative perspectives were used ‘extensively’ in a large majority of conversations”.

This is System 2 thinking, and this top-rated LLM is using it to change the human’s beliefs.

Sam Altman, head of OpenAI, says the goal for GPT-5 that they are working on now is to be even better at System 2 reasoning.

Meanwhile, humans are not evolving very fast, so they will continue to use System 1 for the majority of their decisions. But some humans will turn to these “reasoning” AIs to help them make good decisions in the future.

Will this result in two types of humans?

I use the term “Homo Mythos” to describe the vast majority of humans who make decisions based on myths, superstitions, faulty reasoning, etc. That is System 1 thinking.

I reserve “Homo Sapiens” to describe those humans who are “wise” (“sapiens” is the Latin word). They use System 2 reasoning as much as possible. And soon, with the rise of AIs that may be able to reason better than humans, they will come to rely on these AIs for almost all decisions.

Hopefully those decisions made by AIs will be good for us humans.